As a prerequisite, make sure you have requested access to the Stream Analytics preview if you have not already done so.

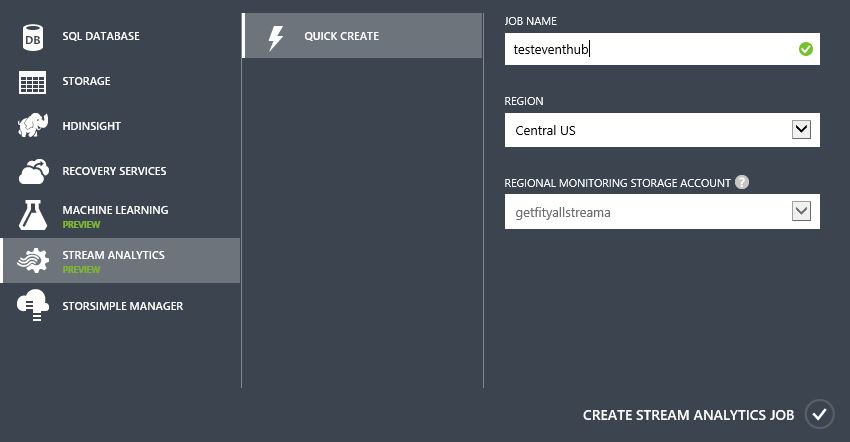

1. Create a Stream Analytics job. While the service is in preview, jobs can only be created in two regions.

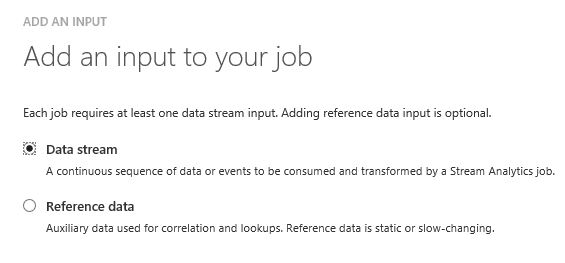

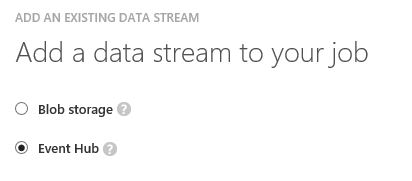

2. Add an input to your job. This is the best part: we get to tap into an Event Hub, analyse its continuous stream of event data, and potentially transform it within the same job.

3. Select Event Hub.

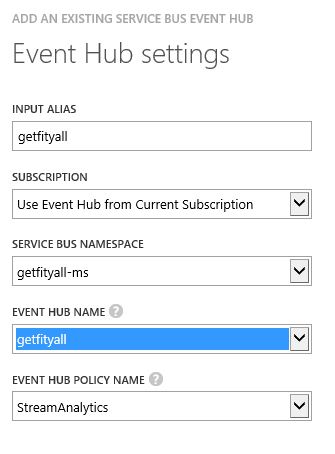

4. Configure the event hub settings. You could also tap into an event hub from a different subscription.

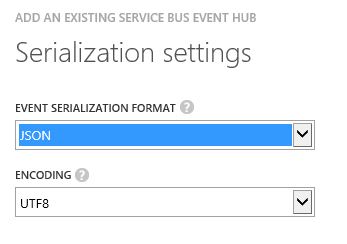

5. Because my event hub's event data is serialized as JSON, JSON is the format I select in the following step.
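For reference, here is roughly what a JSON-serialized event could look like, produced by a minimal Python sketch. The `make_event` helper and the field names (`deviceId`, `sensor`, `temperature`, `timestamp`) are hypothetical, chosen for illustration; they are not necessarily the schema the Raspberry Pi code in this post sends.

```python
import json
from datetime import datetime, timezone


def make_event(device_id, sensor, temperature_c):
    """Serialize one temperature reading as a JSON event body (hypothetical schema)."""
    event = {
        "deviceId": device_id,
        "sensor": sensor,
        "temperature": round(temperature_c, 2),
        # UTC timestamp with an explicit offset, e.g. "...+00:00".
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(event)


if __name__ == "__main__":
    print(make_event("pi-01", "MCP9808", 21.25))
```

Whatever shape you choose, keeping it flat and consistent across devices makes the later query simpler.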

Under the Query tab, I just insert a simple query like the following.

```sql
SELECT * FROM getfityall
```

I'm not doing any transformation yet; I just want to make sure that the event data sent by my Raspberry Pi through the Event Hub REST API arrives properly.
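Sending an event through the Event Hub REST API comes down to an authenticated HTTP POST. The sketch below shows one way to prepare such a request in Python with only the standard library. It assumes the Shared Access Signature (SAS) scheme and the `https://{namespace}.servicebus.windows.net/{event_hub}/messages` endpoint described in the public Event Hubs REST documentation. The namespace, event hub name, policy name and key are placeholders, the content type should be checked against current docs, and the helper names `make_sas_token` and `build_send_request` are hypothetical. This is not the code running on my Raspberry Pi.

```python
import base64
import hashlib
import hmac
import json
import time
import urllib.parse
import urllib.request


def make_sas_token(resource_uri, key_name, key, ttl_seconds=3600, now=None):
    """Build a Shared Access Signature token for an Event Hub resource URI."""
    issued = time.time() if now is None else now
    expiry = str(int(issued + ttl_seconds))
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    # String to sign: URL-encoded resource URI and expiry, separated by a newline.
    string_to_sign = (encoded_uri + "\n" + expiry).encode("utf-8")
    digest = hmac.new(key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    signature = urllib.parse.quote(base64.b64encode(digest).decode("ascii"), safe="")
    return f"SharedAccessSignature sr={encoded_uri}&sig={signature}&se={expiry}&skn={key_name}"


def build_send_request(namespace, event_hub, key_name, key, payload):
    """Prepare (but do not send) a POST of one JSON event to the Event Hub REST endpoint."""
    resource_uri = f"https://{namespace}.servicebus.windows.net/{event_hub}"
    return urllib.request.Request(
        url=resource_uri + "/messages",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": make_sas_token(resource_uri, key_name, key),
            # Content type as listed for the REST "Send Event" operation (assumption; verify).
            "Content-Type": "application/atom+xml;type=entry;charset=utf-8",
        },
        method="POST",
    )
```

Passing the returned request to `urllib.request.urlopen` would actually send the event. Keeping token creation in its own function makes it easy to reuse one token until shortly before its `se` expiry instead of signing every message.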

Next on the list of steps is to set up the job's output.

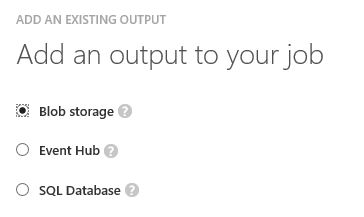

6. Add an output to your job. I'm using BLOB storage to keep things simple, so that I can open the CSV file in Excel and take a look at the data stream.

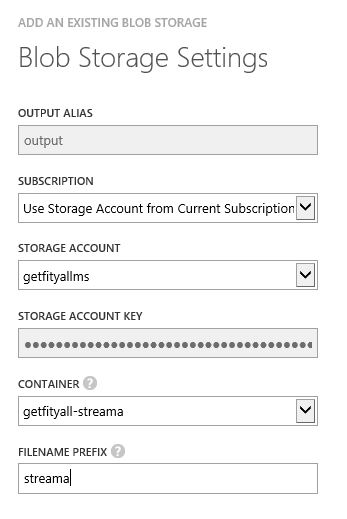

7. Set up the BLOB storage settings.

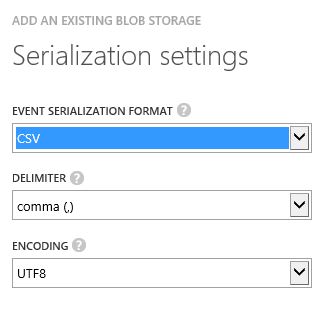

8. Specify the serialization settings. I'm choosing CSV for the reason stated above: it opens directly in Excel.

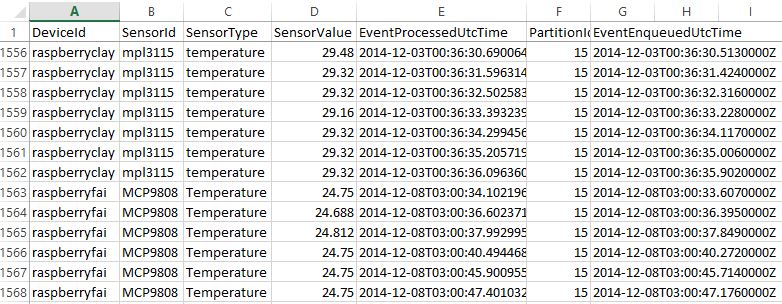

As I pump telemetry data from my Raspberry Pi, I can see the CSV file being created and updated. Just go to the container view of your BLOB storage and download the CSV file.
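If you would rather script the inspection than open Excel, a minimal Python sketch could summarise the downloaded file per device. It assumes the CSV has a header row with hypothetical `deviceId` and `temperature` columns; rename them to match whatever your query actually outputs. The `summarize_by_device` name is also illustrative.

```python
import csv
import io
from collections import defaultdict


def summarize_by_device(csv_text):
    """Return {device_id: (count, min, max, mean)} of the temperature column."""
    readings = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Column names are hypothetical; match them to your query's output.
        readings[row["deviceId"]].append(float(row["temperature"]))
    return {
        device: (len(values), min(values), max(values), sum(values) / len(values))
        for device, values in readings.items()
    }
```

For a downloaded file, something like `open("output.csv", newline="", encoding="utf-8-sig")` followed by `summarize_by_device(f.read())` would work; the `utf-8-sig` codec simply drops a byte-order mark if one is present.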

Below is what my event data stream looks like. It shows event data points captured from two Raspberry Pis: one using the MPL3115 temperature sensor (part of the Xtrinsic sensor board) and the other using the MCP9808 temperature sensor. The fun begins when I write some funky transformation logic in the query and do some real-time complex event processing.
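Before committing transformation logic to the query, it can help to prototype it offline against the downloaded data. The sketch below computes per-device average temperatures over fixed, non-overlapping (tumbling) windows. It is plain Python rather than Stream Analytics query language, and the `(device_id, timestamp, temperature)` input shape and the `tumbling_averages` name are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timezone


def tumbling_averages(events, window_seconds):
    """Average temperature per (device, window start) over fixed, non-overlapping windows.

    events: iterable of (device_id, iso_timestamp, temperature) tuples.
    Timestamps should carry a UTC offset (e.g. "+00:00") so window
    boundaries do not depend on the local time zone.
    """
    totals = defaultdict(lambda: [0.0, 0])  # (device, window start) -> [sum, count]
    for device_id, ts, temperature in events:
        epoch = datetime.fromisoformat(ts).timestamp()
        # Each event falls into exactly one window: floor its time to a window boundary.
        window_start = int(epoch // window_seconds) * window_seconds
        acc = totals[(device_id, window_start)]
        acc[0] += temperature
        acc[1] += 1
    return {
        (device_id, datetime.fromtimestamp(start, tz=timezone.utc)): total / count
        for (device_id, start), (total, count) in sorted(totals.items())
    }
```

In the query itself, the corresponding idea is an aggregate such as `AVG(temperature)` grouped by a tumbling window, for example `GROUP BY deviceId, TumblingWindow(second, 30)` (column names again hypothetical).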